LTI Authors Win Dual Best Paper Awards at EMNLP

LTI Ph.D. students Simran Khanuja and William Chen, along with their co-authors, take two of five Best Paper awards at the prestigious conference

Language Technologies Institute researchers shone at the recent Conference on Empirical Methods in Natural Language Processing (EMNLP), where LTI authors earned two of the conference’s five Best Paper awards.

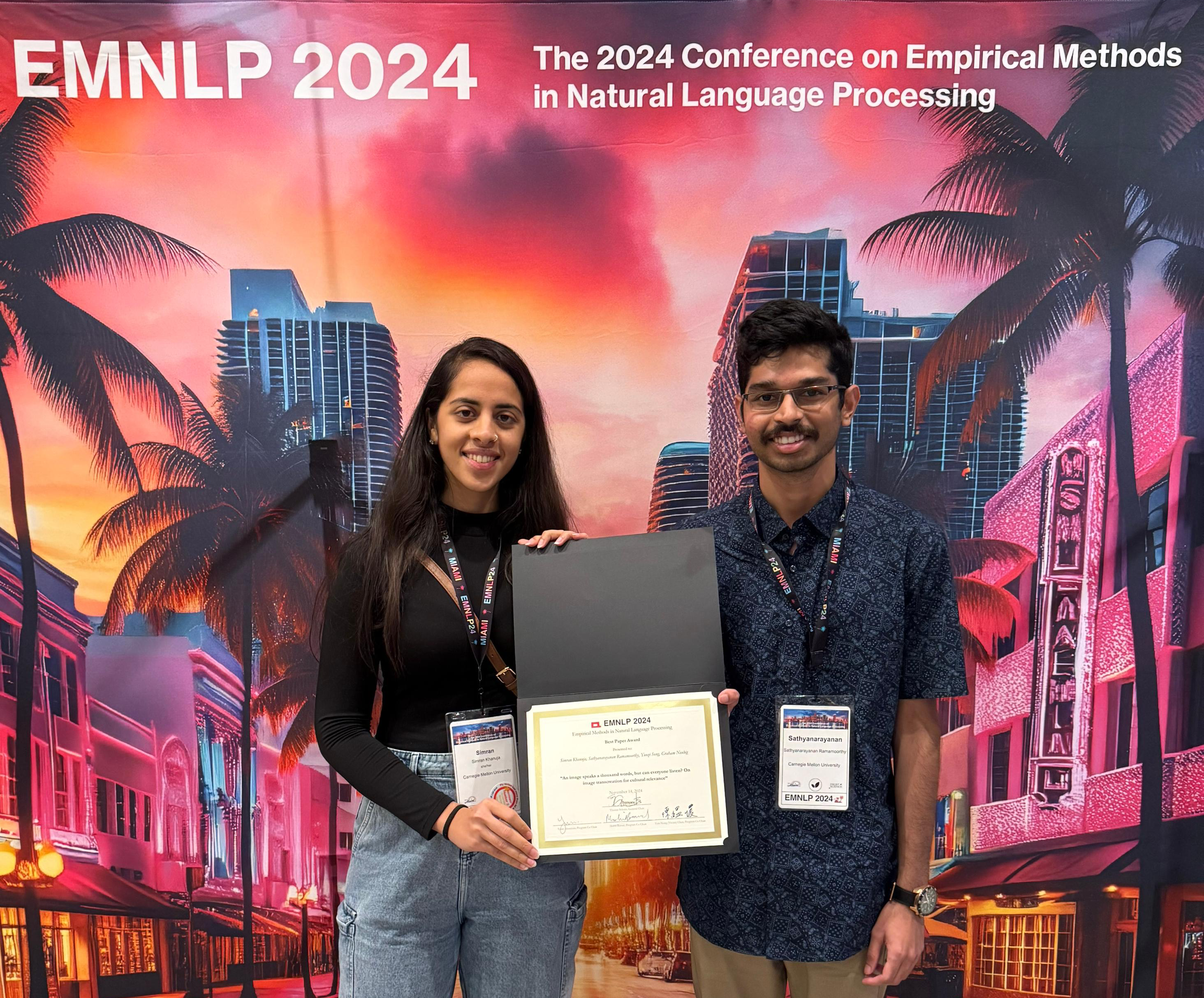

LTI Ph.D. student Simran Khanuja won for her paper, “An Image Speaks a Thousand Words, but Can Everyone Listen? On Image Transcreation for Cultural Relevance.” Her co-authors were her adviser, LTI Associate Professor Graham Neubig; LTI Research Associate Sathyanarayanan Ramamoorthy, a graduate of the Master of Computational Data Science program; and Yueqi Song, a student in CMU’s Fifth Year Master’s Program in computer science.

William Chen, also an LTI Ph.D. student, won for his paper, “Toward Robust Speech Representation Learning for Thousands of Languages,” which was co-authored by his adviser, LTI Associate Professor Shinji Watanabe. Other co-authors included LTI Ph.D. students Jinchuan Tian, Jiatong Shi and Xuankai Chang; LTI Postdoctoral Researcher Soumi Maiti; and Wangyu Zhang of the University of Science and Technology of China, Yifan Peng of Cornell University, Xinjian Li of Google DeepMind, and Karen Livescu of Toyota Technological Institute at Chicago.

Khanuja’s paper focuses on localizing images using generative artificial intelligence — a process she's dubbed transcreation — introducing a new task and benchmark for the machine learning community. She described the task as “crucial for translating multimodal content,” and said that it will have real-world applications in areas including children’s education, advertising, television and film.

“I was overwhelmed and grateful for receiving this recognition,” Khanuja said after the announcement. “It was heartening to see the natural language processing community appreciate our efforts and openly welcome the novel, challenging and impactful problem that we introduce in this work.”

Chen’s research contributes to the field of self-supervised learning (SSL), which allows speech technologies to support a broader spectrum of human languages. Most speech models can serve only about 100 of the nearly 7,000 languages used worldwide. In their paper, Chen and his team introduce the Cross-Lingual Encoder for Universal Speech (XEUS), a model trained on more than 1 million hours of speech data in more than 4,000 languages, a fourfold increase in the language coverage of current SSL models.

Watanabe views both the project and the award as affirmations of the power academic researchers have to make world-changing technological breakthroughs. “This is another example showing that an academic institution can compete with a tech giant,” he said.

EMNLP bills itself as "one of the most prominent conferences in the field of natural language processing." This year’s event, which featured 33 papers from LTI researchers, took place Nov. 12–16 in a hybrid format, with in-person events occurring in Miami.